Reaching external resources from a Service Fabric cluster is trivial, whereas reaching the cluster from the internet requires some configuration. The virtual machine scale set, service endpoint, and load balancer all come into play. At first sight, it might look like a puzzle, but once you understand the mechanisms under the hood, the whole process turns out to be straightforward.

Cluster setup

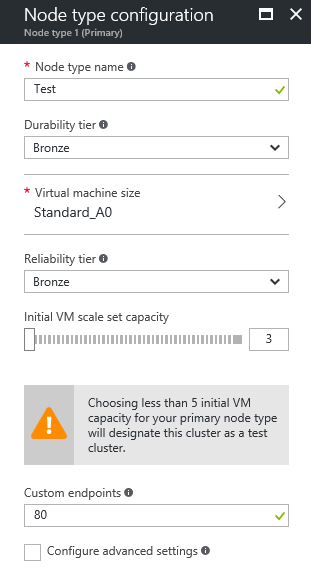

Let’s make a simple Service Fabric service that is reachable from a web browser and reports some information about the state of its computation. To do this, it’s necessary to fill in port number 80 (which is the port of the HTTP protocol) in the custom endpoints section of the node type configuration of the Service Fabric cluster.

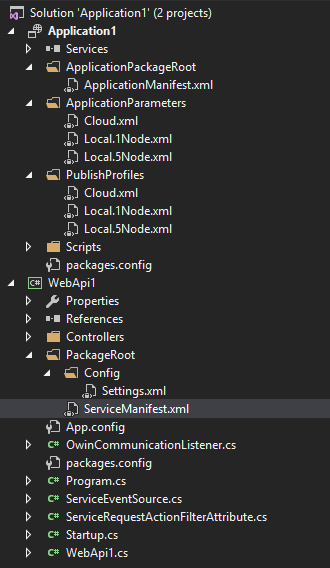

For the service template, we will choose Stateless Web API. (This may not be the right choice for a computation service, but the focus of this example is on networking.) The solution contains many XML configuration files. The most important of them, for now, is ServiceManifest.xml.

Don’t make any changes yet; debug the application first and load the address http://localhost:19080/. You will see Service Fabric Explorer. Then publish your app to the cluster and open the address http://

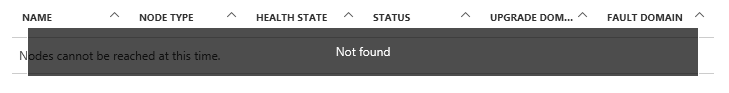

Service Fabric Explorer visibility

The load balancer has a setting called Load balancing rules.

There are two rules that forward TCP ports 19000 and 19080 to all nodes of the Test node type. If you delete those rules, the Service Fabric Explorer will not be accessible from the internet. However, this also means that the Azure Portal will not be able to show the cluster state.

This means that if you wish to have visibility of your cluster via the Azure Portal, then your load balancer must expose a public IP address and your Network Security Group must allow incoming traffic on port 19080. This is a temporary limitation. Configuration of a publicly inaccessible cluster without any loss of management portal functionality will be possible in the upcoming months.

Health probes & Load balancing rules

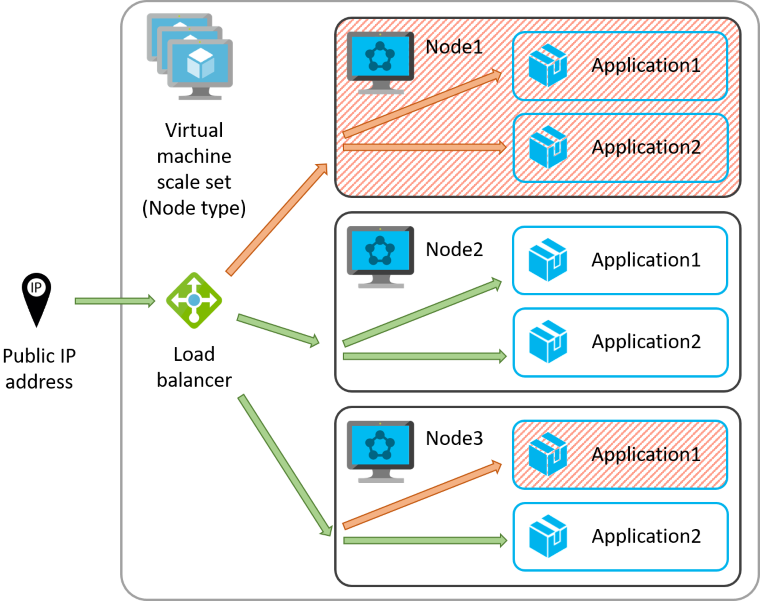

The following image describes the network scheme of the cluster.

The cluster contains only one node type (called Test). Every node type is essentially a separate virtual machine scale set. The load balancer distributes incoming connections across the node instances. Note that Azure Load Balancer does this by default with a hash of each connection's source and destination address, port, and protocol rather than with strict round-robin, so all packets of one connection reach the same node. To decide which nodes may receive traffic, the load balancer relies on health probes. Health probes actively check individual endpoints and tell the load balancer which endpoints are healthy. When a node or application becomes unhealthy, the load balancer stops sending new traffic there. When all instances are unhealthy, the connection times out. This is why setting up all endpoints correctly is not enough; all probes must be set up and running as well.
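The interplay between probes and traffic distribution can be illustrated with a toy model. This is only a sketch: the class and node names are hypothetical, the probe is reduced to reading a flag, and the simple rotation used to pick a node is for illustration and does not model the real distribution algorithm.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    healthy: bool = True  # stand-in for the real state of the application


class LoadBalancerSketch:
    """Toy model: only nodes that passed the last probe run receive traffic."""

    def __init__(self, nodes: List[Node]) -> None:
        self.nodes = nodes
        self.available: List[Node] = []
        self._next = 0

    def run_probes(self) -> None:
        # A real probe sends a TCP or HTTP request; here we only read a flag.
        self.available = [n for n in self.nodes if n.healthy]

    def route(self) -> Optional[str]:
        # None models the client-side timeout when no instance is healthy.
        if not self.available:
            return None
        # Simple rotation, for illustration only.
        node = self.available[self._next % len(self.available)]
        self._next += 1
        return node.name
```

Marking a node as unhealthy and calling `run_probes()` again removes it from the rotation; marking all nodes as unhealthy makes `route()` return `None`, which corresponds to the timeout described above.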

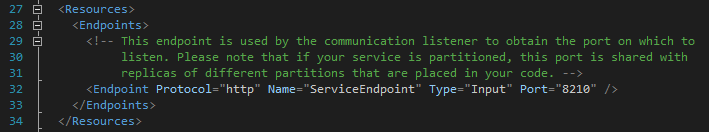

First, look up the ServiceManifest.xml file in the PackageRoot folder in the WebApi1 project and find the service endpoint (ServiceManifest / Resources / Endpoints). There is the port number of the HTTP protocol, which in this case is 8210.
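If you prefer to read the port programmatically, the lookup can be sketched in a few lines of Python. The manifest below is a trimmed, illustrative sample rather than the file generated for this project: the package name, version, and endpoint name are placeholders, and only the port 8210 comes from the text above.

```python
import xml.etree.ElementTree as ET

# XML namespace used by Service Fabric manifest files.
FABRIC_NS = {"sf": "http://schemas.microsoft.com/2011/01/fabric"}

# Trimmed sample manifest; the names and version are placeholders.
SAMPLE_MANIFEST = """
<ServiceManifest Name="WebApi1Pkg" Version="1.0.0"
                 xmlns="http://schemas.microsoft.com/2011/01/fabric">
  <Resources>
    <Endpoints>
      <Endpoint Protocol="http" Name="ServiceEndpoint" Type="Input" Port="8210" />
    </Endpoints>
  </Resources>
</ServiceManifest>
"""


def endpoint_ports(manifest_xml: str) -> dict:
    """Map endpoint names to port numbers (ServiceManifest / Resources / Endpoints)."""
    root = ET.fromstring(manifest_xml.strip())
    ports = {}
    for endpoint in root.findall("sf:Resources/sf:Endpoints/sf:Endpoint", FABRIC_NS):
        port = endpoint.get("Port")
        if port is not None:  # endpoints without a fixed port are skipped
            ports[endpoint.get("Name")] = int(port)
    return ports


print(endpoint_ports(SAMPLE_MANIFEST))
```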

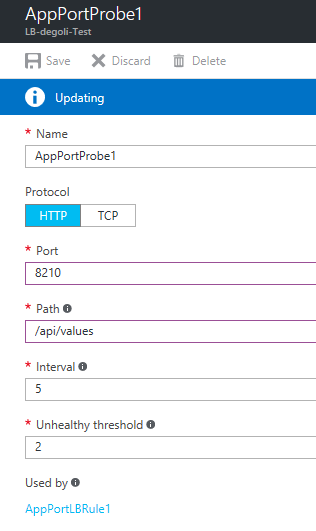

Second, configure the load balancer in your Service Fabric cluster. It cannot be opened from the cluster panel; you must list all resources in the resource group and find the load balancer there. In the load balancer panel, select Health probes and configure the AppPortProbe1 probe. Set the protocol to HTTP, change the port to the number found in the ServiceManifest.xml file, and set up the correct path. The path is the URL that the probe calls. An HTTP probe treats only a 200 response as healthy, so if your application answers the probe’s request with any other status code (for example, 404 because the path does not exist), the application will be considered unhealthy regardless of its actual state. Lastly, click Save.
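Before saving the probe, it can help to check by hand what the probe would see. The following is a minimal sketch of such a check, assuming that only an HTTP 200 response counts as healthy. The function names are hypothetical, and the URL in the usage note is an example built from the port and path mentioned in this article.

```python
import urllib.error
import urllib.request


def is_healthy_status(status: int) -> bool:
    # Assumption: only HTTP 200 is treated as a healthy response.
    return status == 200


def probe(url: str, timeout: float = 5.0) -> bool:
    """Send one GET request and report whether the endpoint looks healthy."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return is_healthy_status(response.status)
    except urllib.error.HTTPError as err:
        # 4xx and 5xx answers arrive here, e.g. 404 for a wrong probe path.
        return is_healthy_status(err.code)
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, or timeout.
        return False
```

For a locally running service, a call might look like `probe("http://localhost:8210/api/values")`. Note that `urlopen` follows redirects, which a real probe may not do, so treat the result as a hint only.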

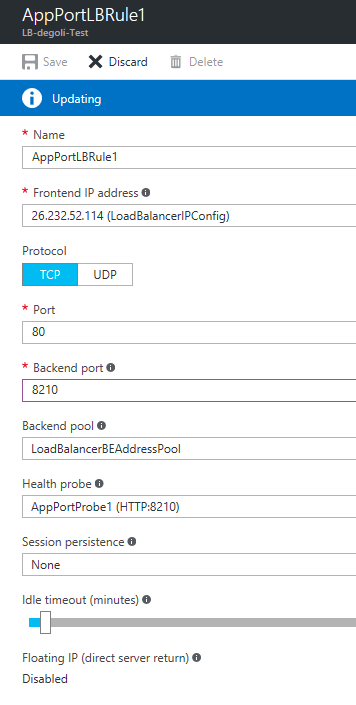

Third, select Load balancing rules on the load balancer panel and configure the AppPortLBRule1 rule. Change the backend port to the number found in the ServiceManifest.xml file and click Save.
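Conceptually, a load balancing rule is a mapping from a public frontend port to a backend port on the nodes. The sketch below models this under the assumption that the rule listens on frontend port 80 and forwards to the service port 8210; the class, the function, and the node address are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class LoadBalancingRule:
    name: str
    frontend_port: int  # port exposed on the public IP address
    backend_port: int   # port the service listens on (from ServiceManifest.xml)


def backend_target(rule: LoadBalancingRule, node_ip: str,
                   requested_port: int) -> Optional[Tuple[str, int]]:
    """Return the (address, port) a request is forwarded to, or None if no match."""
    if requested_port != rule.frontend_port:
        return None
    return (node_ip, rule.backend_port)


rule = LoadBalancingRule("AppPortLBRule1", frontend_port=80, backend_port=8210)
print(backend_target(rule, "10.0.0.4", 80))    # forwarded to the service port
print(backend_target(rule, "10.0.0.4", 8080))  # no rule for this port
```

If the backend port in the rule does not match the port in the service manifest, requests reach the node but nothing is listening there.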

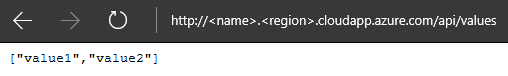

Finally, open the URL of your Service Fabric cluster (http://<name>.<region>.cloudapp.azure.com/api/values) in the browser. You will be connected via the load balancer to one of the three instances of your WebApi1 service. The service responds according to the logic coded in the ValuesController class.

The path called by the load balancer’s probe should not return any data, only a success or error status code. The probe’s interval must be short so that the load balancer can react quickly to application failures. For the same reason, handling a probe call must be cheap: the probe calls alone must not put noticeable load on the application.
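What such a lightweight probe endpoint looks like can be sketched independently of the web framework. The example below uses Python's standard library HTTP server rather than the ASP.NET Web API from this article. The path `/api/health` and the function `application_is_healthy` are hypothetical placeholders.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

HEALTH_PATH = "/api/health"  # hypothetical probe path


def application_is_healthy() -> bool:
    # Placeholder: a real service would do a cheap check of its own state here.
    return True


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != HEALTH_PATH:
            status = 404
        elif application_is_healthy():
            status = 200
        else:
            status = 503
        # Status code only: no response body, so each probe call stays cheap.
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args) -> None:
        # Keep frequent probe calls out of the log.
        pass


def make_server(port: int = 8210) -> HTTPServer:
    """Create the server; call serve_forever() on the result to start it."""
    return HTTPServer(("127.0.0.1", port), HealthHandler)
```

The handler does no work beyond the health check itself, which keeps the cost of a short probe interval low.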

This article has covered load balancer settings for a stateless reliable service partitioned as a singleton (one primary replica). Partitioned or replicated services rely on the Service Fabric Naming Service. This topic will be covered in another article.